Health Promotion Perspectives. 13(3):183-191.

doi: 10.34172/hpp.2023.22

Systematic Review

Exploring the role of ChatGPT in patient care (diagnosis and treatment) and medical research: A systematic review

Ravindra Kumar Garg Conceptualization, Supervision, Writing – original draft, 1, *

Vijeth L Urs Formal analysis, Software, 1

Akshay Anand Agarwal Formal analysis, Visualization, 2

Sarvesh Kumar Chaudhary Data curation, Software, 1

Vimal Paliwal Data curation, Writing – review & editing, 3

Sujita Kumar Kar Validation, Writing – review & editing, 4

Author information:

1Department of Neurology, King George’s Medical University, Lucknow, India

2Department of Surgery, King George’s Medical University, Lucknow, India

3Department of Neurology, Sanjay Gandhi Institute of Medical Sciences, Lucknow, India

4Department of Psychiatry, King George’s Medical University, Lucknow, India

Abstract

Background:

ChatGPT is an artificial intelligence-based tool developed by OpenAI (California, USA). This systematic review examines the potential of ChatGPT in patient care and its role in medical research.

Methods:

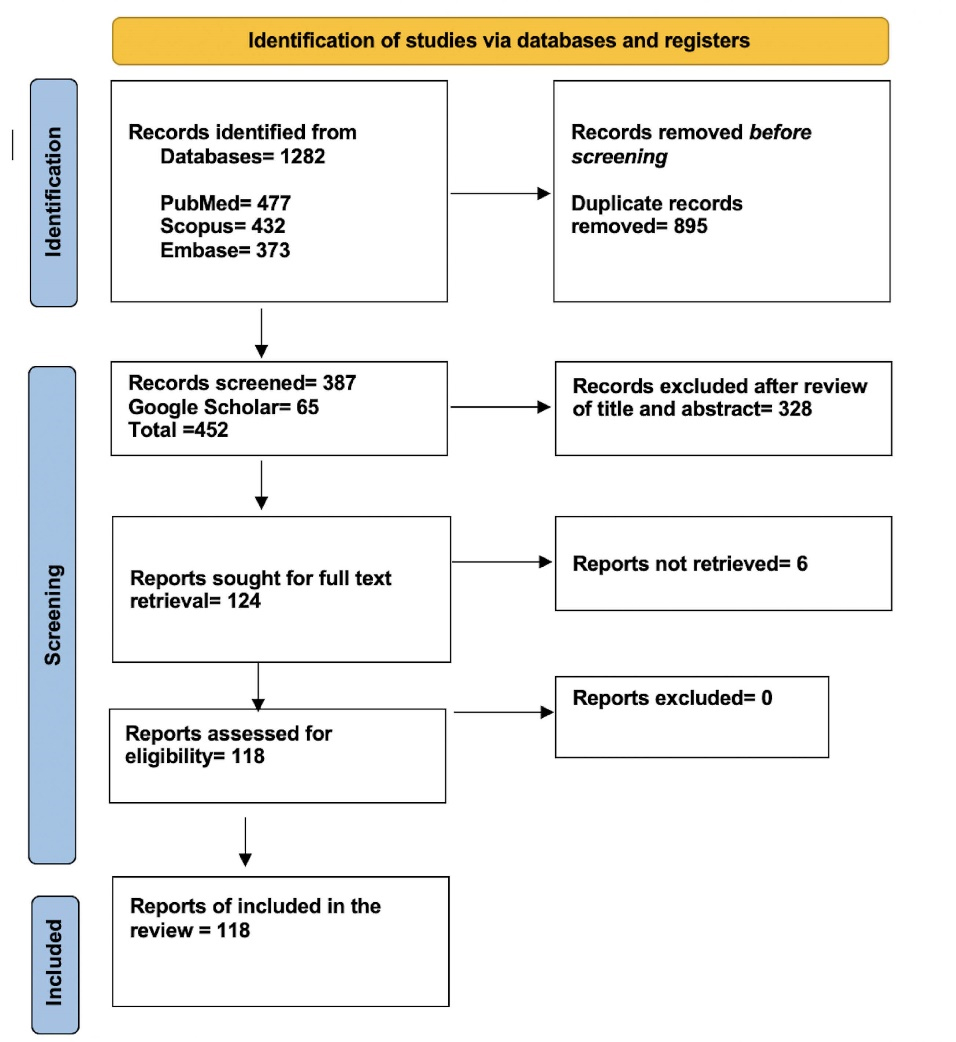

The systematic review was conducted according to the PRISMA guidelines. The Embase, Scopus, PubMed and Google Scholar databases were searched, as were preprint databases. Our search aimed to identify all kinds of publications, without any restrictions, on ChatGPT and its application in medical research, medical publishing and patient care. We used the search term "ChatGPT". We reviewed all kinds of publications, including original articles, reviews, editorials/commentaries, and even letters to the editor. Each selected record was analysed using ChatGPT, and the responses generated were compiled in a table. The Word table was converted into a PDF and further analysed using ChatPDF.

Results:

We reviewed the full texts of 118 articles. ChatGPT can assist with patient enquiries, note writing, decision-making, trial enrolment, data management, decision support, research support, and patient education. However, the solutions it offers are usually insufficient and contradictory, raising questions about their originality, privacy, correctness, bias, and legality. Because it lacks human-like qualities, ChatGPT's legitimacy as an author is questioned when it is used for academic writing. ChatGPT-generated content also raises concerns about bias and possible plagiarism.

Conclusion:

Although ChatGPT can help with patient treatment and research, there are issues with accuracy, authorship, and bias. ChatGPT can serve as a "clinical assistant" and help with research and scholarly writing.

Keywords: Artificial intelligence, Machine learning, Authorship, Publishing, Scholarly

Copyright and License Information

© 2023 The Author(s).

This is an open access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Introduction

ChatGPT (Chat Generative Pre-trained Transformer) is an artificial intelligence (AI)-based natural language processing tool developed by OpenAI (California, USA). ChatGPT is a chatbot, that is, a type of software that generates text resembling human conversation. It can respond to follow-up questions, recognise errors, debunk unfounded theories, and turn down inappropriate requests. ChatGPT is built on large language models (LLMs), which are highly complex deep-learning programmes capable of understanding and producing text in a manner strikingly similar to that of humans. Using the large knowledge base they acquire from massive datasets, LLMs can recognise, summarise, translate, predict, and create text as well as other kinds of information.1-4

The possible uses of ChatGPT in medicine are currently under intense investigation. ChatGPT is considered to have enormous potential to help experts with tasks ranging from clinical and laboratory diagnosis to the planning and execution of medical research.5,6 Another significant use of ChatGPT in medical research is as a virtual assistant that helps physicians write manuscripts more efficiently.7 The use of ChatGPT in medical writing is considered to be associated with several ethical and legal issues, including possible copyright violations, medico-legal issues, and the demand for transparency in AI-generated content.8-12

Key knowledge gaps about ChatGPT's role in medical research and clinical practice concern the accuracy of ChatGPT in producing trustworthy health information, the ethical and legal ramifications, the interpretability of AI decisions, the potential for bias, integration with healthcare systems, professional AI literacy, patient perspectives, and data privacy.13,14 In this systematic review, we aimed to review published articles and explore the potential of ChatGPT in facilitating patient care, medical research and medical writing. We also focused on the ethical issues associated with the use of ChatGPT.

Materials and Methods

We performed a systematic review of published articles on ChatGPT. The protocol of the systematic review was registered with PROSPERO (CRD42023415845).15 Our systematic review was conducted following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.

Search strategy

We searched four databases: PubMed, Scopus, Embase, and Google Scholar. Our search aimed to identify all kinds of articles on ChatGPT and its application in medical research, scholarly writing and clinical practice published up to 24 May 2023. Articles related to medical education were not considered. The search term we used was “ChatGPT”. We reviewed all kinds of publications describing ChatGPT, including original articles, reviews, editorials/commentaries and even letters to the editor. Apart from excluding medical education, we placed no restrictions or limits on our search strategy.

Data extraction

Papers were selected in two steps. In the first step, two reviewers (RKG and VP) screened the titles and abstracts. In the second, two reviewers (VLU and SKC) examined the full texts of the selected papers to determine their eligibility. A third author (SKC) settled any differences that arose between the two reviewers. Two reviewers (RKG and VP) then assessed the information available in each included publication to judge its suitability for inclusion in the review. Any disagreement between them was resolved by mutual agreement; if a dispute persisted, it was resolved through consultation with a third reviewer (SKC).

The EndNote 20 web tool (Clarivate Analytics) was used to handle duplicate records. This process was carried out independently by two reviewers (RKG and VP), and any issues that arose were resolved through discussion with another reviewer. The numbers of records retrieved and assessed at each stage are presented in a PRISMA flow chart, which was made using EndNote 20 (Clarivate Analytics).

The reviewers involved in this review are faculty members at a leading teaching institution in India. They all have sufficient training in, and knowledge of, systematic review procedures.

Data analysis

Data analysis was done jointly by two reviewers (RKG and VP). ChatGPT was used extensively to analyse the selected records and to write this manuscript. A table was made with six columns (first author/sole author, country of origin, peer-review status (peer-reviewed or preprint), title of the paper, and a short point-wise summary of the full text). A short point-wise summary of the full text of every article was created with the help of ChatGPT. The voluminous Word file was then converted to a PDF file and processed with the related tool “ChatPDF” (available at https://www.chatpdf.com/). The following questions were put to ChatPDF:

- What are the potential roles of ChatGPT in medical writing and research?
- What could be the role of ChatGPT in clinical practice?
- What are the ethical issues associated with paper writing?
- Can ChatGPT be an author?
- Can ChatGPT write text in good English and free of plagiarism?
- Role of ChatGPT so far in clinical practice and research related to neurological disorders.
- Effectiveness and efficiency of ChatGPT in medical research and clinical settings
- Potential benefits and limitations of ChatGPT in medical research and clinical applications
- The ethical implications of using ChatGPT in medical research and clinical practice
- Identify the gaps in the current research on ChatGPT and suggest areas for further investigation.
- Provide insights into the potential future applications of ChatGPT in medical research and clinical practice
- Recommendations for researchers, clinicians, and policymakers on the use of ChatGPT in medical research and clinical practice

All the responses were compiled in a Word file.

Quality assessment

Quality assessment was not done.

Results

Our data collection followed the PRISMA guidelines (Table S1, Supplementary file 1). The PRISMA flowchart for our systematic review is shown in Figure 1. We reviewed 118 publications, which originated from across the globe. There were 33 original articles; the rest were commentaries/editorials, review articles, research letters or letters to the editor. Of the 118 articles, 18 were available only as preprints. Summaries of the 118 articles and the answers to the 12 questions are provided as tables (Supplementary file 2, Tables S2 and S3).16-133

Figure 1. The study's PRISMA flow diagram, showing how articles were selected for this systematic review


ChatGPT's role in medical research has multiple dimensions: assisting with data gathering, analysis, and interpretation; assisting with scientific writing and publication editing; assisting with decision-making and treatment planning; and enhancing medical teaching and learning. To make data collection and processing more efficient, ChatGPT can be used to speed up procedures such as patient questionnaires, interviews, and epidemiological research. It can also help researchers locate essential information, develop hypotheses, and analyse data, all of which may accelerate the research process. In scientific writing, ChatGPT can be used to generate ideas, draft articles, and help authors produce clear and understandable content. It can also aid text summarisation, language editing, and proofreading, including of abstracts. To ensure quality, it is crucial to thoroughly assess and edit the content produced by ChatGPT.

ChatGPT can also be a valuable tool in clinical practice. It can assist clinicians with patient inquiries, writing medical notes and discharge summaries, and making informed decisions about treatment plans. It also has the potential to serve as a personalized learning tool, encouraging critical thinking and problem-based learning among medical professionals.

While ChatGPT offers numerous benefits, there are also limitations and ethical considerations to be addressed. These include potential biases in training data, issues of accuracy and reliability, privacy concerns, questions about authorship in academic papers, and ethical implications of its use. It is crucial to establish regulations and control mechanisms to ensure the ethical utilization of ChatGPT and similar AI tools in medical research and clinical practice.

Discussion

We examined two main uses of ChatGPT: in healthcare settings, and in medical writing and research. We studied 118 articles, most of which were opinion pieces, commentaries, and reviews.16-133 Ruksakulpiwat et al conducted a similar review: of the 114 articles they identified, six met their criteria and were analyzed. These articles covered a variety of uses of ChatGPT, such as drug discovery, writing literature reviews, improving medical reports, providing medical information, improving research methods, analyzing data, and personalizing medicine.134

Levin et al, on the other hand, analyzed the first batch of publications about ChatGPT. They found 42 articles published in 26 journals in the 69 days after ChatGPT was launched. Only one was a research article; the rest were mostly editorials and news pieces. Five publications focused on studies of ChatGPT, and none addressed its use in obstetrics and gynecology. Nature published the most articles, followed by Radiology and Lancet Digital Health. The articles mostly discussed the quality of ChatGPT's scientific writing, its features, and its performance; some also addressed who should receive credit for the work and ethical concerns. Interestingly, the articles describing a study appeared in journals with a significantly lower average impact factor (a measure of a journal's influence) than the other articles.135

In our review, we identified several potential advantages of using ChatGPT in the medical field. It appears to enhance productivity and expedite research workflows by aiding in data organization, assisting in the selection of trial candidates, and supporting overall research activities. Furthermore, ChatGPT's capacity to review manuscripts and contribute to editing may improve the efficiency of academic publishing.136 Beyond research, it could also prove beneficial for patient education, fostering scientific exploration, and shaping clinical decision-making.137 However, certain limitations and ethical concerns associated with its use must also be considered. However sophisticated, the model cannot offer comprehensive diagnoses or replace the human qualities inherent to medical practice.138 Ethical issues also arise, specifically in relation to potential biases in the machine learning model and potential breaches of privacy.139,140 Moreover, while ChatGPT can process and generate information, it may not exhibit the level of originality, creativity, and critical thinking often required in the medical field.

The use of ChatGPT in producing scholarly articles is also raising questions in academic publishing. While these tools can greatly enhance the clarity and fluency of written material, human oversight must be maintained throughout the process, because AI can produce content that sounds authoritative yet is inaccurate, incomplete, or biased. Plausible-sounding but incorrect GPT-4 responses, known as “hallucinations,” can be harmful, particularly in medicine.22,141 It is therefore essential to check and validate GPT-4's output. ChatGPT can, for instance, generate references to fabricated research publications.142 Authors must therefore thoroughly check and revise the output of these tools.
Furthermore, AI or AI-assisted tools should not be listed as authors or co-authors in the by-line of publications; instead, their use should be transparently acknowledged within the manuscript.143,144 For example, according to Elsevier's policy on AI for authors, the responsibility and accountability for the work ultimately lie with the human authors, regardless of any technological assistance they may have received.145 Authors who wish to use ChatGPT in publishing medical content should comply with the specific regulations set by the journal concerning AI-generated content.

Limitations

Our systematic review on ChatGPT has certain limitations. The search term used for the systematic review was “ChatGPT”. This could limit the search, as not all articles might use this exact term when discussing or evaluating the tool. Variations such as “OpenAI’s language model”, “GPT-4”, or other related terms could have been included to increase search sensitivity. Our study does not consider articles related to medical education. This could limit the scope of the review, as ChatGPT might have potential applications and limitations within medical education that are not captured. Our review analyzes only ChatGPT and does not compare it with other AI models or tools that could be used in a similar capacity. This might limit the understanding of where ChatGPT stands relative to comparable AI technologies. At the time the systematic review was conducted, there may not have been many long-term original studies on the use of ChatGPT in medical research and patient care. This could limit the review's ability to provide a complete picture of the tool's effectiveness and potential issues over time. Our review includes all kinds of publications, such as editorials, letters to the editor and preprints, which may not be peer-reviewed or have rigorous methodologies. This might affect the quality of the evidence used in this review.

In conclusion, ChatGPT has great potential, which is still evolving. However, ChatGPT cannot yet be trusted as a source of information, and many ethical issues are associated with its use. ChatGPT cannot be credited with authorship. It can, however, serve as a useful clinical assistant, although it is nowhere near able to replace the human brain. Before ChatGPT is deployed in a clinical setting, it is essential to ensure that the model provides accurate and reliable information. The model should be continually updated and improved based on user feedback to address its limitations. Clear guidelines need to be developed for healthcare professionals and patients on when and how to use ChatGPT as a tool. Policies need to be created to protect patient data and privacy, and ethical guidelines should be developed to address the moral dilemmas that arise from using ChatGPT in healthcare.

Acknowledgments

The concept, data collection, analysis, writing, and reporting of this article were done solely by the authors. ChatGPT was used extensively, as described in the Methods section.

Competing Interests

None.

Ethical Approval

None.

Funding

None.

Supplementary Files

Supplementary file 1 (PRISMA checklist) contains Table S1.


References

- OpenAI. Conversational AI Model for Medical Inquiries. ChatGPT. 2023. https://www.openai.com/chatgpt.

- Peters V, Baumgartner M, Froese S, Morava E, Patterson M, Zschocke J, et al. Risk and potential of ChatGPT in scientific publishing. J Inherit Metab Dis 2023. doi: 10.1002/jimd.12666

- Welsby P, Cheung BMY. ChatGPT. Postgrad Med J 2023. doi: 10.1093/postmj/qgad056

- Thirunavukarasu AJ, Ting DSJ, Elangovan K, Gutierrez L, Tan TF, Ting DSW. Large language models in medicine. Nat Med 2023; 29(8):1930-40. doi: 10.1038/s41591-023-02448-8

- Wei Q, Cui Y, Wei B, Cheng Q, Xu X. Evaluating the performance of ChatGPT in differential diagnosis of neurodevelopmental disorders: a pediatricians-machine comparison. Psychiatry Res 2023; 327:115351. doi: 10.1016/j.psychres.2023.115351

- Thondebhavi Subbaramaiah M, Shanthanna H. ChatGPT in the field of scientific publication - are we ready for it? Indian J Anaesth 2023; 67(5):407-8. doi: 10.4103/ija.ija_294_23

- Lenharo M. ChatGPT gives an extra productivity boost to weaker writers. Nature 2023. doi: 10.1038/d41586-023-02270-9

- Scimeca M, Bonfiglio R. Dignity of science and the use of ChatGPT as a co-author. ESMO Open 2023; 8(4):101607. doi: 10.1016/j.esmoop.2023.101607

- Baker N, Thompson B, Fox D. ChatGPT can write a paper in an hour - but there are downsides. Nature 2023. doi: 10.1038/d41586-023-02298-x

- Cohen IG. What should ChatGPT mean for bioethics? Am J Bioeth 2023:1-9. doi: 10.1080/15265161.2023.2233357

- Levy M, Yeh A, Hawkes C, Lechner-Scott J, Giovannoni G. Is using ChatGPT to help write papers and grants is useful, and ethical? Mult Scler Relat Disord 2023; 76:104873. doi: 10.1016/j.msard.2023.104873

- Bommasani R, Liang P, Lee T. Holistic evaluation of language models. Ann N Y Acad Sci 2023; 1525(1):140-6. doi: 10.1111/nyas.15007

- Tulandi T. Disclosure of artificial intelligence/ChatGPT-generated manuscripts. J Obstet Gynaecol Can 2023; 45(8):543-4. doi: 10.1016/j.jogc.2023.03.013

- Ong CWM, Blackbourn HD, Migliori GB. GPT-4, artificial intelligence and implications for publishing. Int J Tuberc Lung Dis 2023; 27(6):425-6. doi: 10.5588/ijtld.23.0143

- Garg RK, Urs VL, Agrawal AA, Chaudhary SK, Paliwal V, Kar SK. Exploring the role of Chat GPT in patient care (diagnosis and treatment) and medical research: a systematic review. medRxiv [Preprint]. June 14, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.06.13.23291311v1.

- Ali MJ, Djalilian A. Readership awareness series - paper 4: chatbots and ChatGPT - ethical considerations in scientific publications. Semin Ophthalmol 2023; 38(5):403-4. doi: 10.1080/08820538.2023.2193444

- Ali SR, Dobbs TD, Hutchings HA, Whitaker IS. Using ChatGPT to write patient clinic letters. Lancet Digit Health 2023; 5(4):e179-e81. doi: 10.1016/s2589-7500(23)00048-1

- Alser M, Waisberg E. Concerns with the usage of ChatGPT in academia and medicine: a viewpoint. Am J Med Open 2023; 9:100036. doi: 10.1016/j.ajmo.2023.100036

- Anderson N, Belavy DL, Perle SM, Hendricks S, Hespanhol L, Verhagen E. AI did not write this manuscript, or did it? Can we trick the AI text detector into generated texts? The potential future of ChatGPT and AI in Sports & Exercise Medicine manuscript generation. BMJ Open Sport Exerc Med 2023; 9(1):e001568. doi: 10.1136/bmjsem-2023-001568

- Arun Babu T, Sharmila V. Using artificial intelligence chatbots like ‘ChatGPT’ to draft articles for medical journals - advantages, limitations, ethical concerns and way forward. Eur J Obstet Gynecol Reprod Biol 2023; 286:151. doi: 10.1016/j.ejogrb.2023.05.008

- Asch DA. An interview with ChatGPT about health care. NEJM Catal Innov Care Deliv 2023; 4(2):1-8. doi: 10.1056/cat.23.0043

- Athaluri SA, Manthena SV, Kesapragada V, Yarlagadda V, Dave T, Duddumpudi RTS. Exploring the boundaries of reality: investigating the phenomenon of artificial intelligence hallucination in scientific writing through ChatGPT references. Cureus 2023; 15(4):e37432. doi: 10.7759/cureus.37432

- Balas M, Ing EB. Conversational AI models for ophthalmic diagnosis: comparison of ChatGPT and the Isabel Pro differential diagnosis generator. JFO Open Ophthalmol 2023; 1:100005. doi: 10.1016/j.jfop.2023.100005

- Barker FG, Rutka JT. Editorial. Generative artificial intelligence, chatbots, and the Journal of Neurosurgery Publishing Group. J Neurosurg 2023:1-3. doi: 10.3171/2023.4.jns23482

- Bauchner H. ChatGPT: not an author, but a tool. Health Affairs Forefront 2023. doi: 10.1377/forefront.20230511.917632

- Baumgartner C. The potential impact of ChatGPT in clinical and translational medicine. Clin Transl Med 2023; 13(3):e1206. doi: 10.1002/ctm2.1206

- Benoit JRA. ChatGPT for clinical vignette generation, revision, and evaluation. medRxiv [Preprint]. February 8, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.02.04.23285478v1.

- Bhattacharya K, Bhattacharya AS, Bhattacharya N, Yagnik VD, Garg P, Kumar S. ChatGPT in surgical practice - a New Kid on the Block. Indian J Surg 2023. doi: 10.1007/s12262-023-03727-x

- Biswas S. ChatGPT and the future of medical writing. Radiology 2023; 307(2):e223312. doi: 10.1148/radiol.223312

- Boßelmann CM, Leu C, Lal D. Are AI language models such as ChatGPT ready to improve the care of individuals with epilepsy? Epilepsia 2023; 64(5):1195-9. doi: 10.1111/epi.17570

- Brainard J. Journals take up arms against AI-written text. Science 2023; 379(6634):740-1. doi: 10.1126/science.adh2762

- Cahan P, Treutlein B. A conversation with ChatGPT on the role of computational systems biology in stem cell research. Stem Cell Reports 2023; 18(1):1-2. doi: 10.1016/j.stemcr.2022.12.009

- Nasrallah HA. A ‘guest editorial’ … generated by ChatGPT? Curr Psychiatr 2023; 22(4):6-7. doi: 10.12788/cp.0348

- Cascella M, Montomoli J, Bellini V, Bignami E. Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J Med Syst 2023; 47(1):33. doi: 10.1007/s10916-023-01925-4

- Chen S, Kann BH, Foote MB, Aerts HJ, Savova GK, Mak RH, et al. The utility of ChatGPT for cancer treatment information. medRxiv [Preprint]. March 23, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.03.16.23287316v1.

- Cheng K, Wu H, Li C. ChatGPT/GPT-4: enabling a new era of surgical oncology. Int J Surg 2023; 109(8):2549-50. doi: 10.1097/js9.0000000000000451

- Chervenak J, Lieman H, Blanco-Breindel M, Jindal S. The promise and peril of using a large language model to obtain clinical information: ChatGPT performs strongly as a fertility counseling tool with limitations. Fertil Steril 2023; 120(3 Pt 2):575-83. doi: 10.1016/j.fertnstert.2023.05.151

- Cifarelli CP, Sheehan JP. Large language model artificial intelligence: the current state and future of ChatGPT in neuro-oncology publishing. J Neurooncol 2023; 163(2):473-4. doi: 10.1007/s11060-023-04336-0

- Corsello A, Santangelo A. May artificial intelligence influence future pediatric research?-The case of ChatGPT. Children (Basel) 2023; 10(4):757. doi: 10.3390/children10040757

- D’Amico RS, White TG, Shah HA, Langer DJ. I asked a ChatGPT to write an editorial about how we can incorporate chatbots into neurosurgical research and patient care…. Neurosurgery 2023; 92(4):663-4. doi: 10.1227/neu.0000000000002414

- Darkhabani M, Alrifaai MA, Elsalti A, Dvir YM, Mahroum N. ChatGPT and autoimmunity - a new weapon in the battlefield of knowledge. Autoimmun Rev 2023; 22(8):103360. doi: 10.1016/j.autrev.2023.103360

- Dave M. Plagiarism software now able to detect students using ChatGPT. Br Dent J 2023; 234(9):642. doi: 10.1038/s41415-023-5868-8

- Dave T, Athaluri SA, Singh S. ChatGPT in medicine: an overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front Artif Intell 2023; 6:1169595. doi: 10.3389/frai.2023.1169595

- Day T. A preliminary investigation of fake peer-reviewed citations and references generated by ChatGPT. Prof Geogr 2023:1-4. doi: 10.1080/00330124.2023.2190373

- de Oliveira RS, Ballestero M. The future of pediatric neurosurgery and ChatGPT: an editor’s perspective. Arch Pediatr Neurosurg 2023; 5(2):e1912023. doi: 10.46900/apn.v5i2.191

- De Vito EL. [Artificial intelligence and ChatGPT. Would you read an artificial author?]. Medicina (B Aires) 2023; 83(2):329-32. [Spanish]

- Dergaa I, Chamari K, Zmijewski P, Ben Saad H. From human writing to artificial intelligence generated text: examining the prospects and potential threats of ChatGPT in academic writing. Biol Sport 2023; 40(2):615-22. doi: 10.5114/biolsport.2023.125623

- Donato H, Escada P, Villanueva T. The transparency of science with ChatGPT and the emerging artificial intelligence language models: where should medical journals stand? Acta Med Port 2023; 36(3):147-8. doi: 10.20344/amp.19694

- Dunn C, Hunter J, Steffes W, Whitney Z, Foss M, Mammino J. Artificial intelligence-derived dermatology case reports are indistinguishable from those written by humans: a single-blinded observer study. J Am Acad Dermatol 2023; 89(2):388-90. doi: 10.1016/j.jaad.2023.04.005

- Fatani B. ChatGPT for future medical and dental research. Cureus 2023; 15(4):e37285. doi: 10.7759/cureus.37285

- Galland J. [Chatbots and internal medicine: Future opportunities and challenges]. Rev Med Interne 2023; 44(5):209-11. doi: 10.1016/j.revmed.2023.04.001. [French]

- Gandhi Periaysamy A, Satapathy P, Neyazi A, Padhi BK. ChatGPT: roles and boundaries of the new artificial intelligence tool in medical education and health research - correspondence. Ann Med Surg (Lond) 2023; 85(4):1317-8. doi: 10.1097/ms9.0000000000000371

- Gao CA, Howard FM, Markov NS, Dyer EC, Ramesh S, Luo Y, et al. Comparing scientific abstracts generated by ChatGPT to original abstracts using an artificial intelligence output detector, plagiarism detector, and blinded human reviewers. bioRxiv [Preprint]. December 27, 2022. Available from: https://www.biorxiv.org/content/10.1101/2022.12.23.521610v1.

- Gödde D, Nöhl S, Wolf C, Rupert Y, Rimkus L, Ehlers J, et al. ChatGPT in medical literature – a concise review and SWOT analysis. medRxiv [Preprint]. May 8, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.05.06.23289608v1.

- Gordijn B, Have HT. ChatGPT: evolution or revolution? Med Health Care Philos 2023; 26(1):1-2. doi: 10.1007/s11019-023-10136-0

- Gottlieb M, Kline JA, Schneider AJ, Coates WC. ChatGPT and conversational artificial intelligence: friend, foe, or future of research? Am J Emerg Med 2023; 70:81-3. doi: 10.1016/j.ajem.2023.05.018

- Graf A, Bernardi RE. ChatGPT in research: balancing ethics, transparency and advancement. Neuroscience 2023; 515:71-3. doi: 10.1016/j.neuroscience.2023.02.008

- Graham A. ChatGPT and other AI tools put students at risk of plagiarism allegations, MDU warns. BMJ 2023; 381:1133. doi: 10.1136/bmj.p1133

- Gravel J, D’Amours-Gravel M, Osmanlliu E. Learning to fake it: limited responses and fabricated references provided by ChatGPT for medical questions. medRxiv [Preprint]. March 24, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.03.16.23286914v1.

- Guo E, Gupta M, Sinha S, Rössler K, Tatagiba M, Akagami R, et al. neuroGPT-X: towards an accountable expert opinion tool for vestibular schwannoma. medRxiv [Preprint]. February 26, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.02.25.23286117v1.

- Gurha P, Ishaq N, Marian AJ. ChatGPT and other artificial intelligence chatbots and biomedical writing. J Cardiovasc Aging 2023; 3(2):20. doi: 10.20517/jca.2023.13

- Hurley D. Your AI program will write your paper now: neurology editors on managing artificial intelligence submissions. Neurol Today 2023; 23(5):10-11. doi: 10.1097/01.NT.0000923016.69043.1e

- Haemmerli J, Sveikata L, Nouri A, May A, Egervari K, Freyschlag C, et al. ChatGPT in glioma patient adjuvant therapy decision making: ready to assume the role of a doctor in the tumour board? medRxiv [Preprint]. March 24, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.03.19.23287452v1.

- Harskamp RE, Clercq LD. Performance of ChatGPT as an AI-assisted decision support tool in medicine: a proof-of-concept study for interpreting symptoms and management of common cardiac conditions (AMSTELHEART-2). medRxiv [Preprint]. March 26, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.03.25.23285475v1.

- Hill-Yardin EL, Hutchinson MR, Laycock R, Spencer SJ. A ChatGPT about the future of scientific publishing. Brain Behav Immun 2023; 110:152-4. doi: 10.1016/j.bbi.2023.02.022

- Hirani R, Farabi B, Marmon S. Experimenting with ChatGPT: concerns for academic medicine. J Am Acad Dermatol 2023; 89(3):e127-e9. doi: 10.1016/j.jaad.2023.04.045

- Homolak J. Opportunities and risks of ChatGPT in medicine, science, and academic publishing: a modern Promethean dilemma. Croat Med J 2023; 64(1):1-3. doi: 10.3325/cmj.2023.64.1

- Hosseini M, Gao CA, Liebovitz D, Carvalho A, Ahmad FS, Luo Y, et al. An exploratory survey about using ChatGPT in education, healthcare, and research. medRxiv [Preprint]. April 3, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.03.31.23287979v1.

- Howard A, Hope W, Gerada A. ChatGPT and antimicrobial advice: the end of the consulting infection doctor? Lancet Infect Dis 2023; 23(4):405-6. doi: 10.1016/s1473-3099(23)00113-5

- Hsu TW, Tsai SJ, Ko CH, Thompson T, Hsu CW, Yang FC, et al. Plagiarism, quality, and correctness of ChatGPT-generated vs human-written abstract for research paper. SSRN [Preprint]. Available from: https://ssrn.com/abstract=4429014.

- Huang J, Tan M. The role of ChatGPT in scientific communication: writing better scientific review articles. Am J Cancer Res 2023; 13(4):1148-54.

- Janssen BV, Kazemier G, Besselink MG. The use of ChatGPT and other large language models in surgical science. BJS Open 2023; 7(2):zrad032. doi: 10.1093/bjsopen/zrad032

- Johnson SB, King AJ, Warner EL, Aneja S, Kann BH, Bylund CL. Using ChatGPT to evaluate cancer myths and misconceptions: artificial intelligence and cancer information. JNCI Cancer Spectr 2023; 7(2):pkad015. doi: 10.1093/jncics/pkad015

- Juhi A, Pipil N, Santra S, Mondal S, Behera JK, Mondal H. The capability of ChatGPT in predicting and explaining common drug-drug interactions. Cureus 2023; 15(3):e36272. doi: 10.7759/cureus.36272

- Kaneda Y. In the era of prominent AI, what role will physicians be expected to play? QJM 2023:hcad099. doi: 10.1093/qjmed/hcad099

- Kim JH. Search for medical information and treatment options for musculoskeletal disorders through an artificial intelligence chatbot: focusing on shoulder impingement syndrome. medRxiv [Preprint]. December 19, 2022. Available from: https://www.medrxiv.org/content/10.1101/2022.12.16.22283512v2.

- Kim SG. Using ChatGPT for language editing in scientific articles. Maxillofac Plast Reconstr Surg 2023; 45(1):13. doi: 10.1186/s40902-023-00381-x

- Koo M. The importance of proper use of ChatGPT in medical writing. Radiology 2023; 307(3):e230312. doi: 10.1148/radiol.230312

- Kumar AH. Analysis of ChatGPT tool to assess the potential of its utility for academic writing in biomedical domain. BEMS Rep 2023; 9(1):24-30.

- Lee P, Bubeck S, Petro J. Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine. N Engl J Med 2023; 388(13):1233-9. doi: 10.1056/NEJMsr2214184

- Levin G, Meyer R, Yasmeen A, Yang B, Guigue PA, Bar-Noy T. Chat Generative Pre-trained Transformer-written obstetrics and gynecology abstracts fool practitioners. Am J Obstet Gynecol MFM 2023; 5(8):100993. doi: 10.1016/j.ajogmf.2023.100993

- Li H, Moon JT, Purkayastha S, Celi LA, Trivedi H, Gichoya JW. Ethics of large language models in medicine and medical research. Lancet Digit Health 2023; 5(6):e333-e5. doi: 10.1016/s2589-7500(23)00083-3

- Loh E. ChatGPT and generative AI chatbots: challenges and opportunities for science, medicine and medical leaders. BMJ Lead 2023. doi: 10.1136/leader-2023-000797

- Li S. ChatGPT has made the field of surgery full of opportunities and challenges. Int J Surg 2023; 109(8):2537-8. doi: 10.1097/js9.0000000000000454

- Liebrenz M, Schleifer R, Buadze A, Bhugra D, Smith A. Generating scholarly content with ChatGPT: ethical challenges for medical publishing. Lancet Digit Health 2023; 5(3):e105-e6. doi: 10.1016/s2589-7500(23)00019-5

- Lin Z. Modernizing authorship criteria: challenges from exponential authorship inflation and generative artificial intelligence. PsyArXiv [Preprint]. January 30, 2023. Available from: https://psyarxiv.com/s6h58.

- Maeker E, Maeker-Poquet B. ChatGPT: a solution for producing medical literature reviews? NPG 2023; 23(135):137-43. doi: 10.1016/j.npg.2023.03.002

- Marchandot B, Matsushita K, Carmona A, Trimaille A, Morel O. ChatGPT: the next frontier in academic writing for cardiologists or a Pandora’s box of ethical dilemmas. Eur Heart J Open 2023; 3(2):oead007. doi: 10.1093/ehjopen/oead007

- Martínez-Sellés M, Marina-Breysse M. Current and future use of artificial intelligence in electrocardiography. J Cardiovasc Dev Dis 2023; 10(4):175. doi: 10.3390/jcdd10040175

- Mehnen L, Gruarin S, Vasileva M, Knapp B. ChatGPT as a medical doctor? A diagnostic accuracy study on common and rare diseases. medRxiv [Preprint]. April 27, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.04.20.23288859v2.

- Mello MM, Guha N. ChatGPT and physicians’ malpractice risk. JAMA Health Forum 2023; 4(5):e231938. doi: 10.1001/jamahealthforum.2023.1938

- Mese I. The imperative of a radiology AI deployment registry and the potential of ChatGPT. Clin Radiol 2023; 78(7):554. doi: 10.1016/j.crad.2023.04.001

- Nastasi AJ, Courtright KR, Halpern SD, Weissman GE. Does ChatGPT provide appropriate and equitable medical advice?: A vignette-based, clinical evaluation across care contexts. medRxiv [Preprint]. March 1, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.02.25.23286451v1.

- Nguyen Y, Costedoat-Chalumeau N. [Artificial intelligence and internal medicine: the example of hydroxychloroquine according to ChatGPT]. Rev Med Interne 2023; 44(5):218-26. doi: 10.1016/j.revmed.2023.03.017. [French]

- Nógrádi B, Polgár TF, Meszlényi V, Kádár Z, Hertelendy P, Csáti A, et al. ChatGPT M.D.: is there any room for generative AI in neurology and other medical areas? SSRN [Preprint]. Available from: https://ssrn.com/abstract=4372965.

- North RA. Plagiarism reimagined. Function (Oxf) 2023; 4(3):zqad014. doi: 10.1093/function/zqad014

- Oh N, Choi GS, Lee WY. ChatGPT goes to the operating room: evaluating GPT-4 performance and its potential in surgical education and training in the era of large language models. Ann Surg Treat Res 2023; 104(5):269-73. doi: 10.4174/astr.2023.104.5.269

- Okan Ç. AI and psychiatry: the ChatGPT perspective. Alpha Psychiatry 2023; 24(2):41-2. doi: 10.5152/alphapsychiatry.2023.010223

- Parsa A, Ebrahimzadeh MH. ChatGPT in medicine; a disruptive innovation or just one step forward? Arch Bone Jt Surg 2023; 11(4):225-6. doi: 10.22038/abjs.2023.22042

- ChatGPT: friend or foe? Lancet Digit Health 2023; 5(3):e102. doi: 10.1016/s2589-7500(23)00023-7

- Pourhoseingholi MA, Hatamnejad MR, Solhpour A. Does ChatGPT (or any other artificial intelligence language tool) deserve to be included in authorship list? Gastroenterol Hepatol Bed Bench 2023; 16(1):435-7. doi: 10.22037/ghfbb.v16i1.2747

- Rao D. The urgent need for healthcare workforce upskilling and ethical considerations in the era of AI-assisted medicine. Indian J Otolaryngol Head Neck Surg 2023; 75(3):1-2. doi: 10.1007/s12070-023-03755-9

- Ray PP, Majumder P. AI tackles pandemics: ChatGPT’s game-changing impact on infectious disease control. Ann Biomed Eng 2023:1-3. doi: 10.1007/s10439-023-03239-5

- Ros-Arlanzón P, Pérez-Sempere A. [ChatGPT: a novel tool for writing scientific articles, but not an author (for the time being)]. Rev Neurol 2023; 76(8):277. doi: 10.33588/rn.7608.2023066. [Spanish]

- Sabry Abdel-Messih M, Kamel Boulos MN. ChatGPT in clinical toxicology. JMIR Med Educ 2023; 9:e46876. doi: 10.2196/46876

- Sallam M. The utility of ChatGPT as an example of large language models in healthcare education, research and practice: systematic review on the future perspectives and potential limitations. medRxiv [Preprint]. February 21, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.02.19.23286155v1.

- Sallam M, Salim NA, Al-Tammemi AB, Barakat M, Fayyad D, Hallit S. ChatGPT output regarding compulsory vaccination and COVID-19 vaccine conspiracy: a descriptive study at the outset of a paradigm shift in online search for information. Cureus 2023; 15(2):e35029. doi: 10.7759/cureus.35029

- Salvagno M, Taccone FS, Gerli AG. Can artificial intelligence help for scientific writing? Crit Care 2023; 27(1):75. doi: 10.1186/s13054-023-04380-2

- Sanmarchi F, Bucci A, Nuzzolese AG, Carullo G, Toscano F, Nante N, et al. A step-by-step researcher’s guide to the use of an AI-based transformer in epidemiology: an exploratory analysis of ChatGPT using the STROBE checklist for observational studies. Z Gesundh Wiss 2023:1-36. doi: 10.1007/s10389-023-01936-y

- Sarink MJ, Bakker IL, Anas AA, Yusuf E. A study on the performance of ChatGPT in infectious diseases clinical consultation. Clin Microbiol Infect 2023; 29(8):1088-9. doi: 10.1016/j.cmi.2023.05.017

- Schulte B. Capacity of ChatGPT to identify guideline-based treatments for advanced solid tumors. Cureus 2023; 15(4):e37938. doi: 10.7759/cureus.37938

- Singh OP. Artificial intelligence in the era of ChatGPT - opportunities and challenges in mental health care. Indian J Psychiatry 2023; 65(3):297-8. doi: 10.4103/indianjpsychiatry.indianjpsychiatry_112_23

- Singh S, Djalilian A, Ali MJ. ChatGPT and ophthalmology: exploring its potential with discharge summaries and operative notes. Semin Ophthalmol 2023; 38(5):503-7. doi: 10.1080/08820538.2023.2209166

- Tang L, Sun Z, Idnay B, Nestor JG, Soroush A, Elias PA, et al. Evaluating large language models on medical evidence summarization. medRxiv [Preprint]. April 24, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.04.22.23288967v1.

- Temsah O, Khan SA, Chaiah Y, Senjab A, Alhasan K, Jamal A. Overview of early ChatGPT’s presence in medical literature: insights from a hybrid literature review by ChatGPT and human experts. Cureus 2023; 15(4):e37281. doi: 10.7759/cureus.37281

- Thorp HH. ChatGPT is fun, but not an author. Science 2023; 379(6630):313. doi: 10.1126/science.adg7879

- Haq ZU, Naeem H, Naeem A, Iqbal F, Zaeem D. Comparing human and artificial intelligence in writing for health journals: an exploratory study. medRxiv [Preprint]. February 26, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.02.22.23286322v1.

- Uprety D, Zhu D, West HJ. ChatGPT - a promising generative AI tool and its implications for cancer care. Cancer 2023; 129(15):2284-9. doi: 10.1002/cncr.34827

- Uz C, Umay E. “Dr ChatGPT”: is it a reliable and useful source for common rheumatic diseases? Int J Rheum Dis 2023; 26(7):1343-9. doi: 10.1111/1756-185x.14749

- van Dis EAM, Bollen J, Zuidema W, van Rooij R, Bockting CL. ChatGPT: five priorities for research. Nature 2023; 614(7947):224-6. doi: 10.1038/d41586-023-00288-7

- Waisberg E, Ong J, Masalkhi M, Kamran SA, Zaman N, Sarker P, et al. GPT-4: a new era of artificial intelligence in medicine. Ir J Med Sci 2023. doi: 10.1007/s11845-023-03377-8

- Wen J, Wang W. The future of ChatGPT in academic research and publishing: a commentary for clinical and translational medicine. Clin Transl Med 2023; 13(3):e1207. doi: 10.1002/ctm2.1207

- Xue VW, Lei P, Cho WC. The potential impact of ChatGPT in clinical and translational medicine. Clin Transl Med 2023; 13(3):e1216. doi: 10.1002/ctm2.1216

- Yadava OP. ChatGPT - a foe or an ally? Indian J Thorac Cardiovasc Surg 2023; 39(3):217-21. doi: 10.1007/s12055-023-01507-6

- Au Yeung J, Kraljevic Z, Luintel A, Balston A, Idowu E, Dobson RJ. AI chatbots not yet ready for clinical use. Front Digit Health 2023; 5:1161098. doi: 10.3389/fdgth.2023.1161098

- Young JN, Ross OH, Poplausky D, Levoska MA, Gulati N, Ungar B. The utility of ChatGPT in generating patient-facing and clinical responses for melanoma. J Am Acad Dermatol 2023; 89(3):602-4. doi: 10.1016/j.jaad.2023.05.024

- Zheng H, Zhan H. ChatGPT in scientific writing: a cautionary tale. Am J Med 2023; 136(8):725-6. doi: 10.1016/j.amjmed.2023.02.011

- Zhong Y, Chen YJ, Zhou Y, Lyu YA, Yin JJ, Gao YJ. The artificial intelligence large language models and neuropsychiatry practice and research ethic. Asian J Psychiatr 2023; 84:103577. doi: 10.1016/j.ajp.2023.103577

- Zhou J, Jia Y, Qiu Y, Lin L. The potential of applying ChatGPT to extract keywords of medical literature in plastic surgery. Aesthet Surg J 2023; 43(9):NP720-NP3. doi: 10.1093/asj/sjad158

- Zhou Z. Evaluation of ChatGPT’s capabilities in medical report generation. Cureus 2023; 15(4):e37589. doi: 10.7759/cureus.37589

- Zhu L, Mou W, Chen R. Can the ChatGPT and other large language models with internet-connected database solve the questions and concerns of patient with prostate cancer and help democratize medical knowledge? J Transl Med 2023; 21(1):269. doi: 10.1186/s12967-023-04123-5

- Zielinski C, Winker M, Aggarwal R, Ferris L, Heinemann M, Lapena JF Jr. Chatbots, ChatGPT, and scholarly manuscripts: WAME recommendations on ChatGPT and chatbots in relation to scholarly publications. Open Access Maced J Med Sci 2023; 11:83-6. doi: 10.3889/oamjms.2023.11502

- Zimmerman A. A ghostwriter for the masses: ChatGPT and the future of writing. Ann Surg Oncol 2023; 30(6):3170-3. doi: 10.1245/s10434-023-13436-0

- Ruksakulpiwat S, Kumar A, Ajibade A. Using ChatGPT in medical research: current status and future directions. J Multidiscip Healthc 2023; 16:1513-20. doi: 10.2147/jmdh.s413470

- Levin G, Brezinov Y, Meyer R. Exploring the use of ChatGPT in OBGYN: a bibliometric analysis of the first ChatGPT-related publications. Arch Gynecol Obstet 2023. doi: 10.1007/s00404-023-07081-x

- Garcia MB. Using AI tools in writing peer review reports: should academic journals embrace the use of ChatGPT? Ann Biomed Eng 2023. doi: 10.1007/s10439-023-03299-7

- Liao Z, Wang J, Shi Z, Lu L, Tabata H. Revolutionary potential of ChatGPT in constructing intelligent clinical decision support systems. Ann Biomed Eng 2023. doi: 10.1007/s10439-023-03288-w

- Tripathi M, Chandra SP. ChatGPT: a threat to the natural wisdom from artificial intelligence. Neurol India 2023; 71(3):416-7. doi: 10.4103/0028-3886.378687

- Conroy G. Scientists used ChatGPT to generate an entire paper from scratch - but is it any good? Nature 2023; 619(7970):443-4. doi: 10.1038/d41586-023-02218-z

- Kleebayoon A, Wiwanitkit V. ChatGPT, authorship, and medical publishing. ACR Open Rheumatol 2023; 5(8):419. doi: 10.1002/acr2.11583

- Park JY. Could ChatGPT help you to write your next scientific paper?: concerns on research ethics related to usage of artificial intelligence tools. J Korean Assoc Oral Maxillofac Surg 2023; 49(3):105-6. doi: 10.5125/jkaoms.2023.49.3.105

- Bhattacharyya M, Miller VM, Bhattacharyya D, Miller LE. High rates of fabricated and inaccurate references in ChatGPT-generated medical content. Cureus 2023; 15(5):e39238. doi: 10.7759/cureus.39238

- Kaplan-Marans E, Khurgin J. ChatGPT wrote this article. Urology 2023. doi: 10.1016/j.urology.2023.03.061

- Goddard J. Hallucinations in ChatGPT: a cautionary tale for biomedical researchers. Am J Med 2023. doi: 10.1016/j.amjmed.2023.06.012

- Tools such as ChatGPT threaten transparent science; here are our ground rules for their use. Nature 2023; 613(7945):612. doi: 10.1038/d41586-023-00191-1